Generic MettleCI Pipeline Description

Introduction

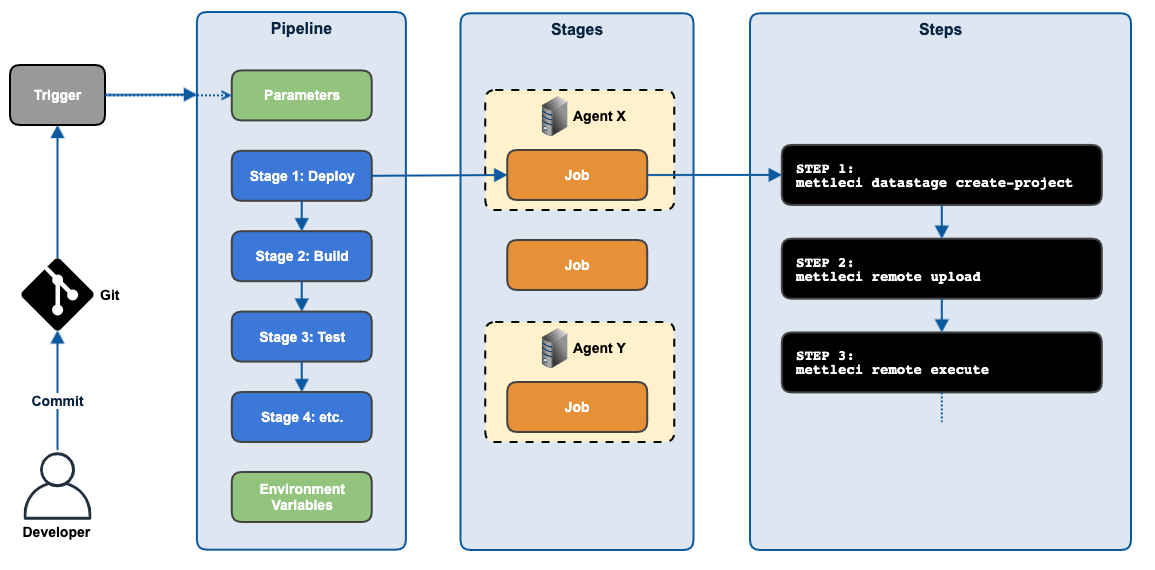

This section describes the overall structure and behaviour of a MettleCI pipeline in a build tool-agnostic manner. To start, we’ll introduce some terminology used when describing build pipelines. Note that this is a generic set of terms, and your build tool of choice may use some, all, or none of these when describing its components.

The terms we will use are:

Pipeline: A collection of Stages. A pipeline is the scripted sequence of operations that is triggered by your build tool’s trigger condition, typically a commit to Git.

Stage: A collection of Jobs. A stage represents a phase of your pipeline, such as Deploy, Build, Test, or Promotion. These phases normally run sequentially and are often dependent upon their predecessor stage.

Job: A collection of Steps. Jobs are operations normally grouped by the type of resources required to perform them. One of these resources is a Build Agent (see below). Stages can be configured to run on specific build agents that are deployed on your network to access specific third-party capabilities, such as a DataStage client, a MettleCI Command Line, or a test database.

Step: The actual commands or operations that happen on network endpoints to deliver your pipeline’s desired outcome. Ultimately a pipeline is a collection of commands organised into Stages and Jobs to make their definition and management easier.
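The hierarchy described by these terms can be sketched as a small data model. This is only an illustration of how the terms nest, not how any particular build tool represents them; the class names, the `agent` label, and the `mettleci-agent` value are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    command: str  # the actual command executed on a network endpoint


@dataclass
class Job:
    name: str
    agent: str  # hypothetical label of the build agent this job needs
    steps: List[Step] = field(default_factory=list)


@dataclass
class Stage:
    name: str
    jobs: List[Job] = field(default_factory=list)


@dataclass
class Pipeline:
    name: str
    stages: List[Stage] = field(default_factory=list)

    def commands(self) -> List[str]:
        """Flatten the hierarchy into the ordered list of step commands."""
        return [
            step.command
            for stage in self.stages
            for job in stage.jobs
            for step in job.steps
        ]


ci = Pipeline("ci", [
    Stage("Deploy", [
        Job("Deploy", "mettleci-agent", [
            Step("mettleci datastage create-project"),
            Step("mettleci datastage deploy"),
        ]),
    ]),
    Stage("Test", [
        Job("Static Analysis", "mettleci-agent", [Step("mettleci compliance test")]),
        Job("Unit Testing", "mettleci-agent", [Step("mettleci unittest test")]),
    ]),
])
```

Flattening the model with `ci.commands()` reflects the point made above: ultimately a pipeline is a list of commands, and Stages and Jobs exist to organise them.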

Pipelines also typically have some other inputs:

The Git change set: A reference to a Git repository and commits to that repository which triggered the pipeline.

Pipeline Parameters: see the Parameters section below.

Environment Variables: see the Environment Variables section below.

Build Tool Configuration

Agents

A build agent is a piece of software that listens for commands from your build tool server and invokes the actual build processes defined in your pipeline. (Agents were sometimes referred to as ‘slaves’ in the past, but this usage is deprecated.) An agent is installed and configured separately from your build server. Although it can be installed on the same computer as the build server, it is more commonly (and usefully) installed on a different machine, which may run a different OS from that of the build server.

A MettleCI pipeline comprises many steps which use the MettleCI Command Line Interface as well as some steps which require the capabilities of a Windows-based DataStage client, so you will need to configure a MettleCI Agent Host which contains an agent for your build tool, a DataStage client, and a MettleCI CLI. When you build your pipeline, you need to take appropriate measures to ensure your build tool executes the pipeline steps on the MettleCI Agent Host.

Parallelism

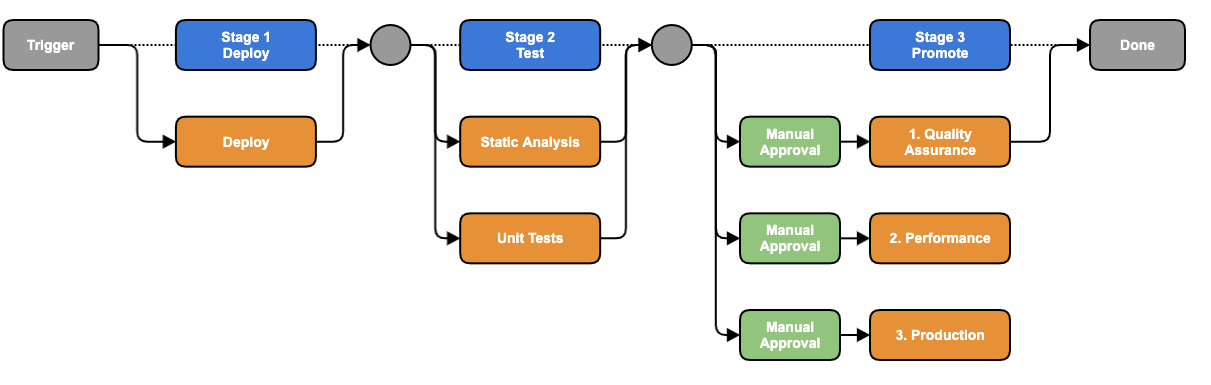

Many build tools permit activity to occur in parallel, normally at the Job level. In our example pipeline, the Test stage is configured to run the Static Analysis (Compliance) and Unit Testing Jobs in parallel, as there are no dependencies or resource conflicts between them.
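As a rough illustration of job-level parallelism, the sketch below starts two independent jobs at the same time and waits for both to finish. Your build tool provides its own mechanism for this; the function names and return values here are placeholders, not MettleCI or build tool APIs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def static_analysis() -> str:
    # Placeholder for the Static Analysis (Compliance) job
    return "compliance: done"


def unit_testing() -> str:
    # Placeholder for the Unit Testing job
    return "unit tests: done"


def run_jobs_in_parallel(jobs: List[Callable[[], str]]) -> List[str]:
    """Start every job at once and return their results in submission order."""
    with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
        futures = [pool.submit(job) for job in jobs]
        # result() blocks until the job finishes and re-raises any exception it threw
        return [future.result() for future in futures]


results = run_jobs_in_parallel([static_analysis, unit_testing])
```

This only works safely because the two jobs share no dependencies or resources, which is the same condition the Test stage relies on.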

Manual Approval Gate

Many build tools permit the inclusion of manual approval gates at certain points in your pipeline. These require a human to log in to the build tool and manually authorize the pipeline execution to proceed, commonly by clicking a button in the user interface. This process can also be used to prompt users for parameters that may affect the pipeline’s remaining steps. In our example pipeline the Promote Stage is configured for manual approval before each of the deployment jobs is executed. You can omit a manual approval gate to have your build tool deploy automatically to a downstream environment upon successful completion of your Continuous Integration tests, in which case you have implemented Continuous Delivery. If this automation also encompasses releases to your production environment then you have implemented Continuous Deployment.
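The behaviour of an approval gate can be sketched as a function that asks for approval before each deployment job. This is a simplified model: the `approve` and `deploy` callables are hypothetical stand-ins for your build tool's approval prompt and deployment job, and stopping at the first unapproved environment is an assumption made for this example.

```python
from typing import Callable, List


def promote(
    environments: List[str],
    approve: Callable[[str], bool],
    deploy: Callable[[str], None],
) -> List[str]:
    """Deploy to each environment in order, stopping at the first one not approved."""
    deployed = []
    for environment in environments:
        if not approve(environment):
            break  # a rejected gate halts the remaining promotions
        deploy(environment)
        deployed.append(environment)
    return deployed


# Example: approvals are granted for SIT and UAT but withheld for PROD
deployed = promote(
    ["sit", "uat", "prod"],
    approve=lambda environment: environment != "prod",
    deploy=lambda environment: None,
)
```

Replacing `approve` with a function that always returns `True` models a pipeline without manual gates.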

Pipeline Configuration

Structure

At a high level, a DevOps pipeline might be structured like this:

The pipeline we’ll be describing here is the MettleCI Continuous Integration ('CI') template pipeline that ships with MettleCI. This has three Stages, one of which (Test) runs its two jobs in parallel. This pipeline makes use of some runtime parameters and environment variables which are described below.

Parameters

A typical pipeline might have the following parameters and environment variables defined:

| Name | Type | Description | Example |
|---|---|---|---|
| datastageUsername, datastagePassword | Credentials | MettleCI DataStage user credentials | |
| osUsername, osPassword | Credentials | MettleCI Operating System user credentials | |
| complianceUsername, compliancePassword | Credentials | Credentials for accessing the Compliance repository | |
| complianceURL | URL | Git endpoint to access your Compliance Repository | |
| domainName | String | The reference to your DataStage development environment's Services tier (host:port) | |
| serverName | String | The reference to your DataStage development environment's Engine tier (host) | |
| projectName | String | The name of your DataStage Development project, which usually acts as the root of subsequent names, e.g. DEV: MyProject, CI: MyProject_ci, SIT: MyProject_sit, UAT: MyProject_uat, PROD: MyProject_prod | |
| environmentId | String | Environment Identifier | |

Environment Variables

| Name | Value | Description |
|---|---|---|
| DATASTAGE_PROJECT | $params.projectName + "_" + $params.environmentId | A convenience value to avoid having to reconstruct the CI project name every time it’s referenced. |
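As a minimal sketch of how this convenience value is derived, the function below joins the project name and environment identifier with an underscore, following the naming examples given for `projectName` above. The function name is an assumption for illustration, and note that the DEV project in those examples carries no suffix, which this sketch does not handle.

```python
def datastage_project(project_name: str, environment_id: str) -> str:
    """Build the target project name, e.g. 'MyProject' + 'ci' gives 'MyProject_ci'."""
    return f"{project_name}_{environment_id}"


ci_project = datastage_project("MyProject", "ci")
```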

Pipeline Stages

For each of the three stages we provide an annotated example of the command that should be run. For every stage a set of cleanup steps should be configured to run every time the stage completes, regardless of whether the stage has completed successfully or not.
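The "always run cleanup" behaviour can be sketched with a `try`/`finally` construct. Most build tools offer a dedicated mechanism for this, so treat the following as an illustration of the control flow only; the function and step names are hypothetical.

```python
from typing import Callable, List


def run_stage(steps: List[Callable[[], None]], cleanup: List[Callable[[], None]]) -> bool:
    """Run the steps in order, always run the cleanup actions, and report success."""
    try:
        for step in steps:
            step()
        return True
    except Exception:
        # A failing step ends the stage early and marks it as failed
        return False
    finally:
        # Executed whether the steps succeeded or not
        for action in cleanup:
            action()


log: List[str] = []


def deploy() -> None:
    log.append("deploy")


def failing_compile() -> None:
    raise RuntimeError("compilation failed")


def tidy_up() -> None:
    log.append("cleanup")


succeeded = run_stage([deploy, failing_compile], [tidy_up])
```

Here the stage fails at the second step, yet `tidy_up` still runs, so `log` ends up containing both `"deploy"` and `"cleanup"`.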

Stage 1 - Deploy

This stage…

Ensures the target DataStage project exists (creating it if not)

Takes a set of non-DataStage assets from your Git repository and substitutes values into them, using values appropriate to the deployment target environment

Uploads those non-DataStage assets and updated files, and then runs a script to provision them in the target environment

Imports your DataStage assets to the target DataStage project and compiles them

Reports compilation success as a test result to your build tool

Removes temporary artefacts on both the target DataStage Engine and on the MettleCI Agent Host
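To make the value substitution step more concrete, here is a small sketch of the general idea: read key/value pairs for the target environment and substitute them into a file's text. It does not reproduce the behaviour of `mettleci properties config`. The `key=value` file layout, the `${NAME}` placeholder syntax, and the sample values are all assumptions made for this example, so check the MettleCI documentation for the real formats.

```python
from string import Template
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse 'key=value' lines, skipping blank lines and '#' comments (assumed format)."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def substitute(text: str, values: Dict[str, str]) -> str:
    """Replace ${NAME} placeholders; unknown placeholders are left untouched."""
    return Template(text).safe_substitute(values)


# Hypothetical contents of an environment-specific properties file such as 'var.ci'
properties = parse_properties("# CI environment\nDB_HOST=ci-db.example.com\n")

# Hypothetical fragment of a script containing placeholders
script = 'export DB_HOST="${DB_HOST}"\nexport OTHER="${UNSET}"\n'
result = substitute(script, properties)
```

The original script is left unchanged and the substituted text is returned as a new value, which mirrors the way the pipeline writes substituted files to a separate output directory.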

Job Steps

// Verify that the target DataStage project exists, creating it if it doesn't

mettleci datastage create-project

-domain $params.domainName -server $params.serverName -project $env.DATASTAGE_PROJECT

-username $params.datastageUsername -password $params.datastagePassword

// Perform a substitution of values in specified files.

// This example operates on *.sh files in the 'datastage' base directory, the DSParams file, and all Parameter Sets

// using values from the file 'var.ci' (as determined by the environmentId parameter)

// Substituted files are created in a new temporary build directory on your build agent host called 'config'

mettleci properties config

-baseDir datastage

-filePattern "*.sh"

-filePattern "DSParams"

-filePattern "Parameter Sets/*/*"

-properties var.$params.environmentId -outDir config

// This copies all files from your repository's 'filesystem' folder, plus the substituted files in 'config', to your DataStage project directory on your DataStage Engine

mettleci remote upload

-host $params.serverName -username $params.osUsername -password $params.osPassword

-transferPattern "filesystem/**/*,config/*"

-destination "$env.DATASTAGE_PROJECT"

// Although the original file is located in the 'datastage' directory, the script is run from the 'config' directory because that

// is the output of the 'mettleci properties config' command, which performed key/value substitutions using the appropriate var.* file.

mettleci remote execute

-host $params.serverName -username $params.osUsername -password $params.osPassword

-script "config\deploy.sh"

// Run incremental deploy

// The 'project-cache' value references a Windows path as it is referring to a location in the build directory on the MettleCI Agent Host

mettleci datastage deploy

-domain $params.domainName -server $params.serverName -project $env.DATASTAGE_PROJECT

-username $params.datastageUsername -password $params.datastagePassword

-assets datastage -parameter-sets "config\Parameter Sets"

-threads 8

-project-cache "C:\dm\mci\cache\$params.serverName\$env.DATASTAGE_PROJECT"

Job Cleanup Steps

// Publish the jUnit compilation test results located under the 'log' directory

junit publish results 'log/**/mettleci_compilation.xml'

// Run the 'cleanup.sh' script on the DataStage Engine to tidy up your filesystem artifacts

mettleci remote execute

-host $params.serverName -username $params.osUsername -password $params.osPassword

-script "config\cleanup.sh"

// Tidy up the agent's working directory to maintain disk hygiene and capacity

delete working dir

Stage 2 - Test

This stage runs two jobs in parallel:

Static Analysis (Compliance)

Fetch your compliance rules from a nominated Git repository

Run those rules against your DataStage assets

Publish compliance outputs as test results to your build tool

Unit Testing

Upload Unit Test artefacts to your target DataStage environment

Run your DataStage jobs (for which a Unit Test is available) in Unit Test mode

Fetch Unit Test result files from your target DataStage environment to your MettleCI Agent Host (where the build agent executes)

Publish Unit Test outputs as a test result to your build tool
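Build tools usually consume test results as JUnit-style XML. As a sketch of what "publishing" such a report involves, the code below counts test cases and collects the names of those that failed. It assumes the conventional JUnit XML layout (`testcase` elements containing `failure` or `error` children); the sample report is invented and is not actual MettleCI output.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def summarise(junit_xml: str) -> Tuple[int, List[str]]:
    """Return the number of test cases and the names of those that failed or errored."""
    root = ET.fromstring(junit_xml)
    cases = list(root.iter("testcase"))
    failed = [
        case.get("name")
        for case in cases
        if case.find("failure") is not None or case.find("error") is not None
    ]
    return len(cases), failed


# Invented sample report with one passing and one failing test case
sample = (
    '<testsuite name="unit">'
    '<testcase name="JobA"/>'
    '<testcase name="JobB"><failure message="row count mismatch"/></testcase>'
    "</testsuite>"
)
total, failed = summarise(sample)
```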

Job 1 Steps - Static Analysis

// Perform a 'git checkout' of a remote repository which is NOT the repository from which this pipeline code was sourced

checkout

branches:'master',

username: $params.complianceUsername,

password: $params.compliancePassword,

repositoryURL: $params.complianceURL

// Executes the 'mettleci compliance test' command to test your repository's DataStage jobs against your Compliance rules.

// Note that 'mettleci compliance test' is not the same as 'mettleci compliance query'. See the documentation for more details.

mettleci compliance test

-assets datastage

-report "compliance_report_warn.xml"

-junit

-test-suite "warnings"

-ignore-test-failures

-rules compliance

-project-cache "C:\dm\mci\cache\$params.serverName\$env.DATASTAGE_PROJECT"

Job Cleanup Steps

// Publish compliance outputs as test results to your build tool

junit testResults: 'compliance_report_*.xml'

Job 2 Steps - Unit Tests

// Upload Unit Test artefacts to the DataStage Engine ('mettleci remote upload')

// Uses DataStage engine tier operating system credentials

mettleci remote upload

-host $params.serverName -username $params.osUsername -password $params.osPassword

-source "unittest"

-transferPattern "**/*"

-destination "/opt/dm/mci/specs/$env.DATASTAGE_PROJECT"

// Run the Unit Tests in the target DataStage project ('mettleci unittest test')

// Uses DataStage user credentials

mettleci unittest test

-domain $params.domainName -server $params.serverName

-username $params.datastageUsername -password $params.datastagePassword

-project $env.DATASTAGE_PROJECT

-specs unittest

-reports test-reports

-project-cache "C:\dm\mci\cache\$params.serverName\$env.DATASTAGE_PROJECT"

// Fetch Unit Test result files from the DataStage Engine ('mettleci remote download')

// Uses DataStage engine tier operating system credentials

mettleci remote download

-host $params.serverName -username $params.osUsername -password $params.osPassword

-source "/opt/dm/mci/reports"

-transferPattern "$env.DATASTAGE_PROJECT/**/*.xml"

-destination "test-reports"

Job Cleanup Steps

// Publish Unit Test outputs as a test result to your build tool

junit publish results 'test-reports/**/*.xml'

// Clean up working directories.

// This is particularly important as unit test data files can potentially occupy significant disk space.

deleteDir()

Stage 3 - Promotion

This stage is essentially a repeat of the initial Stage 1 (Deploy) but without the testing elements. It performs the following actions:

Ensures the target DataStage project exists (creating it if not)

Takes a set of non-DataStage assets from your Git repository and substitutes values into them, using values appropriate to the deployment target environment

Uploads those non-DataStage assets and updated files and runs a script to provision them in the target environment

Imports your DataStage assets to the target DataStage project and compiles them

Removes temporary artefacts on both the target DataStage Engine and on the MettleCI Agent Host

Parameters

The promotion stage is unique among the pipeline stages in that it is effectively a set of identical jobs that differ only in their runtime parameters, which override many of the parameters used for previous stages.

Once those parameters have been overridden, the pipeline needs to recalculate any environment variables that depend upon them, e.g.

DATASTAGE_PROJECT = $params.projectName + "_" + $params.environmentId

Job Steps

// Verify that the target DataStage project exists, creating it if it doesn't

mettleci datastage create-project

-domain $params.domainName -server $params.serverName -project $env.DATASTAGE_PROJECT

-username $params.datastageUsername -password $params.datastagePassword

// Perform a substitution of values in specified files.

mettleci properties config

-baseDir datastage

-filePattern "*.sh"

-filePattern "DSParams"

-filePattern "Parameter Sets/*/*"

-properties var.$params.environmentId -outDir config

// Upload all files from the 'filesystem' and 'config' folders to the DataStage Engine project directory

mettleci remote upload

-host $params.serverName -username $params.osUsername -password $params.osPassword

-transferPattern "filesystem/**/*,config/*"

-destination "$env.DATASTAGE_PROJECT"

// Execute remote provisioning script

mettleci remote execute

-host $params.serverName -username $params.osUsername -password $params.osPassword

-script "config\deploy.sh"

// Run incremental deploy

mettleci datastage deploy

-domain $params.domainName -server $params.serverName -project $env.DATASTAGE_PROJECT

-username $params.datastageUsername -password $params.datastagePassword

-assets datastage -parameter-sets "config\Parameter Sets"

-threads 8

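-project-cache "C:\dm\mci\cache\$params.serverName\$env.DATASTAGE_PROJECT"

Job Cleanup

// Run the 'cleanup.sh' script on the DataStage Engine to tidy up your filesystem artefacts

mettleci remote execute

-host $params.serverName -username $params.osUsername -password $params.osPassword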

-script "config\cleanup.sh"

deleteDir()
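Because the promotion jobs differ only in their runtime parameters, one way to think about them is as a single command template evaluated once per environment. The sketch below overrides a base parameter set for each target and rebuilds the project name before assembling a `mettleci datastage deploy` argument list. It uses only flags that appear in the commands above; the host names, ports, and the structure of the `overrides` dictionary are hypothetical, and credentials and the remaining flags are omitted for brevity.

```python
from typing import Dict, List


def deploy_command(params: Dict[str, str]) -> List[str]:
    """Assemble deploy arguments, recalculating the project name from the parameters."""
    project = f"{params['projectName']}_{params['environmentId']}"
    return [
        "mettleci", "datastage", "deploy",
        "-domain", params["domainName"],
        "-server", params["serverName"],
        "-project", project,
        "-assets", "datastage",
    ]


# Base parameters as used by the earlier stages (values are hypothetical)
base = {
    "projectName": "MyProject",
    "environmentId": "ci",
    "domainName": "dev-services.example.com:9443",
    "serverName": "dev-engine.example.com",
}

# Per-environment overrides supplied at promotion time (values are hypothetical)
overrides = {
    "sit": {"domainName": "sit-services.example.com:9443", "serverName": "sit-engine.example.com"},
    "uat": {"domainName": "uat-services.example.com:9443", "serverName": "uat-engine.example.com"},
}

commands = {
    environment: deploy_command({**base, **values, "environmentId": environment})
    for environment, values in overrides.items()
}
```

Building the argument list as separate items, rather than one concatenated string, avoids quoting problems if a value ever contains spaces.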